How Hackers Use Artificial Intelligence to Outsmart You

The Rise of AI in Cybercrime

Artificial intelligence is the great equalizer in cybersecurity—and not necessarily in a good way. For years, we’ve celebrated AI as a tool for defense, powering next-generation firewalls and threat detection systems. But the same technology is now in the hands of our adversaries, and they are using it with alarming efficiency.

Hackers are no longer just individuals typing cryptic commands in dark basements. They’re running prompts, automating reconnaissance, and letting machine learning models do the social engineering for them. We often talk about AI as a productivity multiplier, but here’s the uncomfortable truth: it’s also a crime multiplier. The same algorithms that help you summarize reports or build workflows can now be used to impersonate your CEO, write flawlessly believable phishing emails, and create malware that bypasses your antivirus.

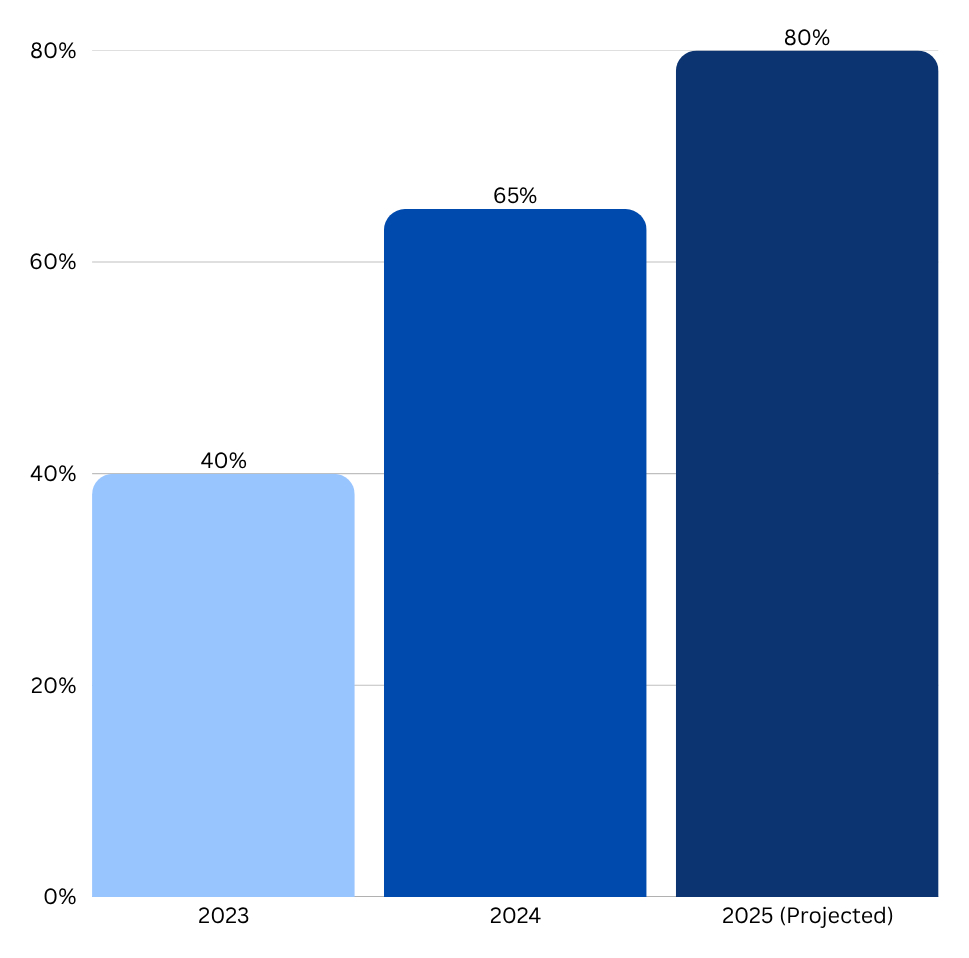

The scale of this shift is staggering. According to industry analysis, the use of generative AI in malicious cyber campaigns is rapidly increasing, turning what were once manual, time-consuming attacks into automated, scalable operations. This new reality demands a fundamental shift in how we approach security.

[Figure: Projected Growth of AI-Assisted Cyber Incidents (year-over-year increase). Data source: projections based on trends from the World Economic Forum Global Cybersecurity Outlook 2024.]

How Hackers Use AI to Attack You

AI isn’t a single magic bullet for hackers; it’s a versatile toolkit that enhances every stage of a cyberattack. From initial access to final impact, machine learning is making attackers faster, stealthier, and far more convincing.

Hyper-Personalized Phishing

AI turns phishing from a clumsy, mass-market scam into a precision-guided art form. It scrapes LinkedIn profiles, company project timelines, and even internal communications to learn an individual’s writing style, tone, and common vocabulary. The result is a message that feels disturbingly authentic.

What used to be a generic “Dear Sir/Madam” email now reads exactly like something your finance lead would send on a busy afternoon:

“Hey, can you process this payment before 3 PM? We need it cleared to avoid shipment delays. — Maria”

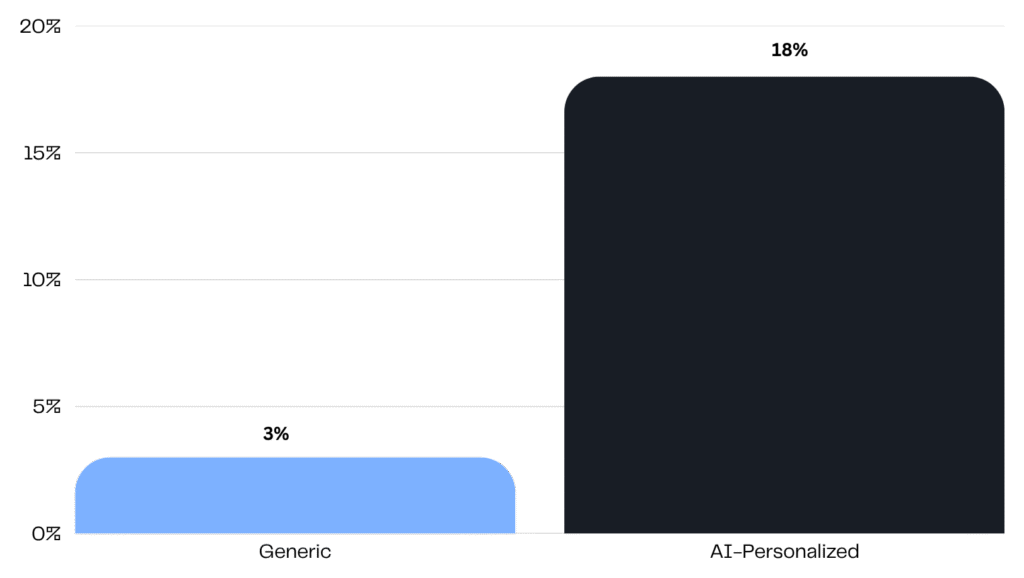

Same tone. Same urgency. Entirely fake. This is what happens when social engineering meets AI-generated empathy. Attacks like this, a form of Business Email Compromise (BEC), are effective because they bypass technical defenses and target human trust directly. A message that matches a known colleague's voice and context is far harder to spot than a generic phishing email, so recipients are more likely to act on it.

Deepfakes and Voice Cloning

Deepfake technology has moved beyond memes and entertainment into the realm of high-stakes corporate fraud. Attackers can now clone the voice or face of a key executive to authorize fraudulent transactions or manipulate employees during “urgent” approval calls.

These voice clones can be generated using just a few seconds of recorded audio from a public interview, a conference call, or even a social media post. They sound real enough to bypass a gut check, especially when an employee is under time pressure or eager to be helpful.

Real-World Example: In a widely reported case from early 2024, a finance worker in Hong Kong was tricked into paying out about US$25.6 million (HK$200 million) after attending a video call with what he believed were his senior colleagues. In reality, everyone else on the call was a deepfake recreation. This incident, as reported by CNN, highlights a terrifying new frontier in social engineering.

AI-Generated Malware

AI isn’t just helping attackers talk their way in; it’s helping them stay hidden once they’re inside. Machine learning models are now used to generate polymorphic and metamorphic malware, which keeps rewriting its own code to avoid detection by signature-based antivirus solutions. Each new variant looks like a completely novel piece of software, so signature matching alone is no longer enough: catching it takes advanced behavioral analysis.

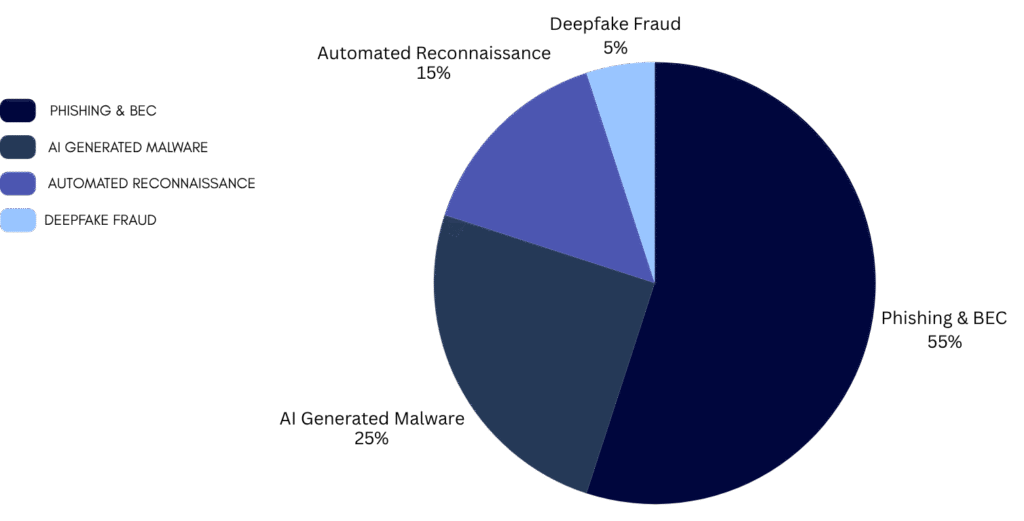

Think of it like a shape-shifting virus—it doesn’t just break into the system once; it continuously learns and adapts to evade capture. This makes detection and remediation far more complex, as security teams are essentially chasing a moving target. Phishing and malware generation remain the most common applications of malicious AI, forming the backbone of modern cybercrime operations.

[Figure: Distribution of Malicious AI Use Cases. Data source: estimated distribution based on analysis from Verizon’s 2024 DBIR and other industry reports.]

Exploiting AI Tools Themselves

Here’s the often-overlooked threat: the AI tools your team uses can become attack vectors themselves. In the rush for productivity, employees often feed confidential data into public chatbots to summarize reports, draft proposals, or analyze code. That same data can leak through conversation logs, be used for model retraining by the service provider, or be exposed via API vulnerabilities.

Attackers don’t always need to “hack” you in the traditional sense. Sometimes, they just need to wait for your employees to inadvertently upload the company’s strategic blueprint, customer list, or source code into an insecure AI model.

The Real Problem: It’s Not Just Technology — It’s Process

Let’s face it—no cybersecurity solution is bulletproof if the processes surrounding it are fragile. AI-assisted attacks don’t just exploit software vulnerabilities; they exploit process vulnerabilities. They thrive on the same weak spots humans always have: haste, assumptions, and inconsistent approval logic.

That’s why defending against AI-driven threats requires more than better firewalls. It requires process intelligence—the kind that forces verification, records context, and enforces controls automatically.

An AI-generated voice clone of a CEO demanding an urgent wire transfer is only successful if your payment process allows for single-point-of-failure approvals based on a phone call. A hyper-personalized phishing email only works if there isn’t a rigid, multi-channel verification process for sensitive requests.
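To make the idea of removing single-point-of-failure approvals concrete, here is a minimal sketch of a payment release check. Everything in it is an assumption for illustration: the `PaymentRequest` and `Approval` types, the channel names, and the threshold value are hypothetical and do not come from any particular BPM product. The rule it encodes is simple: an approval only counts if it comes from someone other than the requester, over a channel other than the one the request arrived on.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Approval:
    approver: str   # hypothetical user ID
    channel: str    # hypothetical channel name, e.g. "erp_portal"


@dataclass
class PaymentRequest:
    requester: str
    amount: float
    origin_channel: str   # channel the request arrived on, e.g. "phone"
    approvals: list[Approval] = field(default_factory=list)


# Illustrative threshold only, not a recommendation.
DUAL_APPROVAL_THRESHOLD = 10_000.0


def can_release(req: PaymentRequest) -> bool:
    """Return True only if enough independent approvals are present."""
    # Discard self-approvals and approvals given over the same channel
    # the request came in on (that channel may be the compromised one).
    independent_approvers = {
        a.approver
        for a in req.approvals
        if a.approver != req.requester and a.channel != req.origin_channel
    }
    required = 2 if req.amount >= DUAL_APPROVAL_THRESHOLD else 1
    return len(independent_approvers) >= required


# A "CEO" phones in an urgent transfer; a confirmation on the same call does not count.
request = PaymentRequest(requester="ceo", amount=250_000.0, origin_channel="phone")
request.approvals.append(Approval("finance_lead", "phone"))
print(can_release(request))
```

Under these assumptions, a convincing voice on the phone is not enough on its own: the transfer stays blocked until two different people confirm it through channels the caller does not control.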

How BPM Solutions Can Protect Your Digital Assets

This is where Business Process Management (BPM) enters the picture—not as a buzzword, but as a real, operational shield. A well-designed BPM system turns your internal workflows into your first and strongest line of defense against AI-driven deception.

By embedding security directly into your everyday operations, a BPM framework hardens your organization from the inside out. It moves security from being an afterthought to being an intrinsic part of how work gets done. Here’s how it helps:

Verification Gates: For sensitive actions like large payments, system access requests, or critical data uploads, BPM enforces mandatory, multi-step verification. An urgent request from a “CEO” would automatically trigger a notification to a second approver through a separate channel, neutralizing the threat of a deepfake.

Automated Audit Trails: Every action within a managed process is logged automatically, creating an immutable record of who did what, when, and why. This provides real-time visibility, helps flag anomalies, and ensures complete accountability.

Role-Based Permissions: BPM ensures that employees only have access to the data and functions essential for their roles. This principle of least privilege minimizes the potential damage if an account is compromised.

Workflow Intelligence: Modern BPM systems can analyze process data to identify suspicious activity patterns—such as a payment request originating from an unusual location or an approval happening outside of normal business hours—and flag them for review before they escalate.
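The last of these controls can be sketched as a pair of simple rules. This is a hedged illustration, not how any specific BPM system works: the `ApprovalEvent` type, the `USUAL_COUNTRIES` table, and the business-hours window are all hypothetical stand-ins for whatever baseline a real deployment would maintain.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ApprovalEvent:
    user: str
    country: str          # where the action originated (hypothetical field)
    timestamp: datetime   # assumed to be in the user's local time


# Illustrative baselines; a real system would learn or configure these.
USUAL_COUNTRIES = {"maria": {"DE"}, "tom": {"DE", "FR"}}
BUSINESS_HOURS = range(8, 18)   # 08:00 through 17:59


def review_flags(event: ApprovalEvent) -> list[str]:
    """Return the reasons, if any, this event should go to manual review."""
    flags = []
    # Unknown users have no usual countries, so they are always flagged.
    if event.country not in USUAL_COUNTRIES.get(event.user, set()):
        flags.append("unusual_location")
    # weekday() is 5 or 6 on Saturday and Sunday.
    is_weekend = event.timestamp.weekday() >= 5
    if is_weekend or event.timestamp.hour not in BUSINESS_HOURS:
        flags.append("outside_business_hours")
    return flags


late_night = ApprovalEvent("maria", "RO", datetime(2024, 3, 5, 23, 30))
print(review_flags(late_night))
```

A flag here does not block anything by itself; the design choice is to route flagged events to a human reviewer before the workflow continues.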

This isn’t about replacing humans with systems. It’s about making sure your processes don’t rely on perfect humans in an imperfect world.

Outsmarting AI with Structure, Not Fear

AI hacking isn’t science fiction—it’s already happening. But fear doesn’t have to drive your strategy; structure does. The rise of AI in cybercrime is an arms race, and you can’t afford to fall behind.

If attackers are using AI to scale deception, you can use process automation to scale trust and verification. If they’re using machine learning to adapt and evade, you can use workflow intelligence to detect anomalies and enforce compliance. And if they’re accelerating the threat, your BPM systems can accelerate your response.

In this new landscape, the best cybersecurity strategy isn’t just about building stronger walls—it’s about building smarter, more resilient systems from within.

Secure Your Business with Intelligent Process Design

Let’s design workflows that think before they act. By embedding security and verification into the DNA of your operations, you can build an organization that is resilient by design. Contact our team to learn how our BPM solutions can strengthen your operational defenses, secure your assets, and keep your people one step ahead—even when AI gets clever.
